How I built my first Python web scraper… for infinite scrolling web pages!

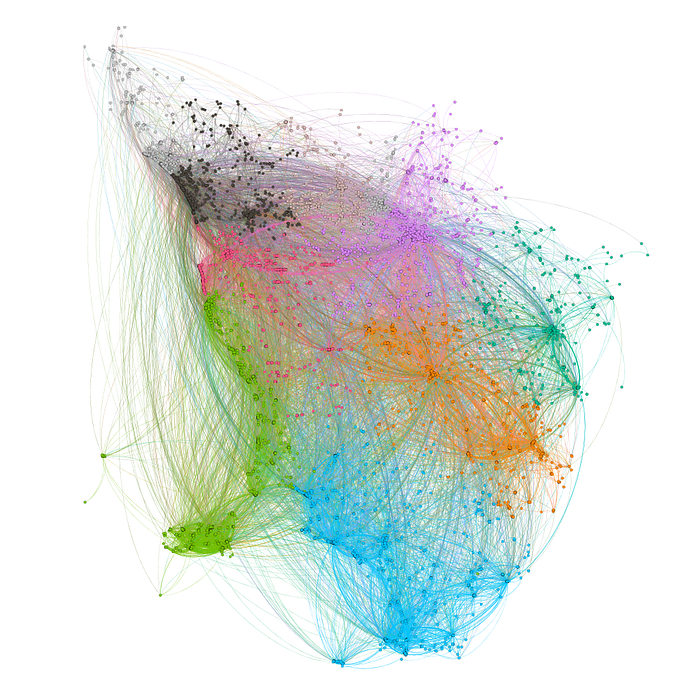

This story begins with my university course in Social Network Analysis. The assigned project consisted of retrieving web data suitable for building a network, and measuring the statistical characteristics of the resulting network… even applying typical graph analyses such as community discovery and link prediction.

In the end, my research focused on Trip Advisor, the famous social network for travellers and their opinions. But that is another story (see the project's GitHub page).

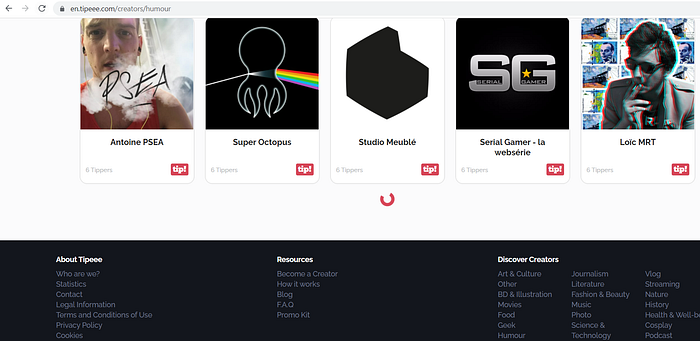

Today I want to tell you how I trained for this project: while trying to build a social network from the site tipeee.com, I ended up implementing a Python library to scrape this social network for content creators. The library is available on PyPI.

Truth be told, this was my first published library, and I also used this exercise to understand how to package a Python module… but what I want to describe here is how to scrape a website. In fact, the first part of the project required building a web crawler to retrieve data from the web. This gave me the opportunity to study Python scraping libraries and understand how they work in various cases.

I learned that there are three main tools for scraping the web with Python, each with its own characteristics…

- The fastest one is Scrapy (I used it for my research on Trip Advisor restaurants in Tuscany), but it is probably the most difficult to use.

- The most versatile, instead, is Selenium. It uses a web driver to automate web navigation. It lets you interact with any web page: triggering events, clicking buttons and scrolling the page. This makes it possible to scrape even dynamic content generated by JavaScript. Scraping with this tool certainly lets you go deep into the page, but it takes more time.

- But if you don’t need the features of these two, you can always rely on the requests library, or on urllib, to call a particular URL or to receive a response through an API call. The page content can then be parsed with tools such as BeautifulSoup (in the case of HTML).
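For a static page, the urllib route can be sketched with the standard library alone. This is a minimal illustration, not code from my library: it swaps in Python's built-in `html.parser` in place of BeautifulSoup so nothing needs to be installed, and `LinkCollector`, `extract_links` and `fetch_links` are hypothetical names.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class LinkCollector(HTMLParser):
    """Collects the href attribute of every <a> tag (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # tag and attribute names arrive lowercased; attrs is a list of (name, value)
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(html):
    """Parse an HTML string and return the links it contains."""
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    return parser.links


def fetch_links(url):
    """Download a page with urllib and extract its links (needs network access)."""
    # Assumption: some sites reject urllib's default user agent, so send a generic one.
    req = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(req, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        html = resp.read().decode(charset, errors="replace")
    return extract_links(html)
```

For example, `extract_links('<a href="/a">A</a>')` returns `['/a']`. Keep in mind that this only sees the HTML the server sends: content added later by JavaScript, like the items of an infinite scrolling page, will not be there.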

When an API is available and public, it is probably the best way to obtain information from a web page. You need to figure out where to find it and how to use it, and this is the core of this story. While exploring the site, I found that for a given category, an “infinite” scrolling web page is generated, showing all the creators in that category. Visit the page at this link to see what I mean.

One possible approach to crawling all the creators is to use Selenium… it can even scroll the page automatically. But this is certainly not the best or fastest approach. In fact, these pages are usually generated from a database, and to query it the site itself uses an internal API.

The question is… where do you find the API???

Open the developer tools and go to the “Network” tab. There you can see that some actions (in this case, scrolling down the page) trigger an API call. As you can see in the video, the response to the call is in JSON format and contains a long list of content creators. The URL to call in order to scrape the site is the following:

https://api.tipeee.com/v2.0/projects?mode=category&page=3&perPage=20&lang=en&category=37

Analysing the URL, after the “?” we find some interesting parameters:

- mode: when it is set to “category”, the “category” parameter must also be specified.

- page: the page we are requesting while scraping… in fact, infinite scrolling pages are built on a finite number of static pages, each containing a specific number of items.

- perPage: the number of items to show on each page; this number always has an upper limit.

- lang: simply the language

- category: the category to scrape… each category has a number assigned, so you need to pass that number in the call; I found a mapping between categories and numbers.
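As a sketch of how these parameters fit together, the call can be rebuilt with `urllib.parse.urlencode`. The `build_projects_url` function is a hypothetical helper, not part of the published library; the base URL and parameter names come from the request observed above, the category number 37 is just the one in that example, and the default `page=1` assumes pages are numbered from 1.

```python
from urllib.parse import urlencode

# Endpoint observed in the browser's Network tab.
BASE_URL = "https://api.tipeee.com/v2.0/projects"


def build_projects_url(category, page=1, per_page=20, lang="en"):
    """Build the URL for one page of creators in one category (hypothetical helper)."""
    params = {
        "mode": "category",  # with mode=category, the category parameter is required
        "page": page,
        "perPage": per_page,
        "lang": lang,
        "category": category,
    }
    # urlencode keeps the dict's insertion order and escapes values when needed
    return BASE_URL + "?" + urlencode(params)
```

Calling `build_projects_url(37, page=3)` reproduces exactly the URL captured from the Network tab, so scraping the category becomes a matter of changing `page`.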

Based on this information, I started developing the library, importing two standard-library modules:

- urllib.request is necessary to call the API

- json is necessary to parse the response

The code lists the categories, but above all it contains the main function of the script: I defined the function “requesting” to call a generic URL, then decode and parse the content of the response.
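The real function lives in the repository; what follows is only a minimal reconstruction of the same idea. It reuses the name `requesting`, but the body is my own sketch, assuming the endpoint returns JSON that is UTF-8 when no charset is declared. The browser-like User-Agent header and the 10-second timeout are assumptions too, not something the API is documented to require.

```python
import json
from urllib.request import Request, urlopen


def requesting(url, timeout=10):
    """Call a URL and return the parsed JSON body (sketch, not the library's code)."""
    # Assumption: a browser-like User-Agent, since some servers block urllib's default.
    req = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(req, timeout=timeout) as resp:
        # Decode with the charset from the Content-Type header, falling back to UTF-8.
        charset = resp.headers.get_content_charset() or "utf-8"
        body = resp.read().decode(charset)
    return json.loads(body)
```

Because `urlopen` also understands `data:` URLs, the function can be tried offline: `requesting('data:application/json,%7B%22ok%22%3A%20true%7D')` returns `{'ok': True}`. With a real HTTP endpoint, error statuses surface as `urllib.error.HTTPError`.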

The rest of the library consists of Python classes:

- Creator

- Creators

- Tipper

- Comment

In the code, the Creators class is defined as a list of Creator objects. It relies on the URL shown above… so, given a category, the class retrieves information for all the creators in that category. If no category is passed, the “Scrape” method retrieves all creators across all categories.
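The structure of the class can be sketched as follows. This is a simplified reconstruction, not the published code: `Creator` just wraps the raw JSON dict of one creator (I don't reproduce the real fields), and `scrape` receives the list of category numbers and a `fetch_category` function as arguments. Both arguments are hypothetical, chosen so the class can be tried without touching the network.

```python
from dataclasses import dataclass


@dataclass
class Creator:
    """One content creator, kept as the raw JSON dict returned by the API."""
    raw: dict


class Creators(list):
    """A list of Creator objects for one category or for all categories."""

    def scrape(self, fetch_category, all_categories, category=None):
        """Fill the list; with no category, scrape every known category.

        fetch_category(category) is a hypothetical callable that returns the
        raw item dicts of one category, e.g. by paging through the API.
        """
        categories = all_categories if category is None else [category]
        for cat in categories:
            self.extend(Creator(item) for item in fetch_category(cat))
        return self
```

Subclassing `list` means the result can be iterated, sliced and counted like any list, which matches the idea of Creators being “a list of Creator”.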

The method works intuitively. The first step is to define the parameters described above. Then it uses a “base URL” into which the parameters can be inserted. It then starts a while loop with two exit conditions: the first checks whether the user has set a limit and it has been reached; the second simply breaks the loop when all creators have been retrieved. Inside the loop, call after call, the only thing that changes is the “page” parameter. Each page contains a limited number of items, and each page has a number. But we do not use an incremental counter to keep track of the pages… the preferred approach, which works here but also in other cases, is to look in the response for something like “next”, “next_page”, “next_link”, etc. In our case, for example, the JSON response contains an object called “pager”:

```
{
  "items": [...],
  "pager": {"itemNbr": 324, "pageNbr": 17, "currentPage": 3, "next": 4, "previous": 2}
}
```

This object contains information about the current page, the number of items and pages, and the previous and next pages… using these fields we can iterate over the pages following best practice.
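Here is a minimal sketch of that loop, with the user limit and the pager's `next` field as the two exit conditions. Several details are assumptions rather than documented behaviour: that pages start at 1, that `next` is null, missing or 0 on the last page, and that the creators sit under `items` as in the response above. `scrape_pages` and `tipeee_category_fetcher` are hypothetical names. If this pager came from the call with perPage=20, its numbers are consistent: 324 / 20 = 16.2, rounded up to 17 pages.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def scrape_pages(fetch_page, limit=None, first_page=1):
    """Collect items page by page, following pager["next"] until it runs out.

    fetch_page(page) must return the parsed JSON of one page: a dict with
    an "items" list and a "pager" dict.
    """
    items = []
    visited = set()  # guards against a pager that points back to a seen page
    page = first_page  # assumption: the API numbers pages from 1
    while page and page not in visited:
        visited.add(page)
        data = fetch_page(page)
        items.extend(data.get("items", []))
        # Exit condition 1: the user-defined limit has been reached.
        if limit is not None and len(items) >= limit:
            return items[:limit]
        # Exit condition 2: no next page (assumed null, missing or 0 at the end).
        page = (data.get("pager") or {}).get("next")
    return items


def tipeee_category_fetcher(category, per_page=20, lang="en"):
    """Return a fetch_page function for one category (needs network access)."""
    def fetch_page(page):
        query = urlencode({"mode": "category", "page": page, "perPage": per_page,
                           "lang": lang, "category": category})
        req = Request("https://api.tipeee.com/v2.0/projects?" + query,
                      headers={"User-Agent": "Mozilla/5.0"})
        with urlopen(req, timeout=10) as resp:
            return json.loads(resp.read().decode("utf-8"))
    return fetch_page
```

Against the live API this would be used as `scrape_pages(tipeee_category_fetcher(37), limit=100)`. Passing the fetch function as an argument keeps the pagination logic separate from the HTTP code, so it can be tested with fake pages.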

I think that’s enough for today… I will present other scrapers in the future, maybe with examples using Selenium or Scrapy. But my goal here is accomplished: to encourage people who need to scrape the web to explore the pages they are interested in. In particular, my suggestion is to check the Network tab in the developer tools and look for the “secret” API. Just remember that the biggest sites on the internet rarely offer an openly accessible API… but they often let you request a personal “token” to be passed as a parameter in the API call.

Thanks for your attention and stay tuned!!!

BYE

P.S. Visit the GitHub Repository ;)